
How to expose multiple applications on Amazon EKS with a single Application Load Balancer

An Italian version of this article is also available.

Expose microservices to the Internet with AWS

One of the defining moments in building a microservices application is deciding how to expose endpoints so that a client or API can send requests and get responses.

Usually, each microservice has its own endpoint. For example, each URL path points to a different microservice:

www.example.com/service1 > microservice1

www.example.com/service2 > microservice2

www.example.com/service3 > microservice3

...

This type of routing is known as path-based routing.

Because a single load balancer can front every service, this approach stays cheap and simple, even when exposing dozens of microservices.

On AWS, both Application Load Balancer (ALB) and Amazon API Gateway support this feature. Therefore, with a single ALB or API Gateway, you can expose microservices running as containers with Amazon EKS or Amazon ECS, or serverless functions with AWS Lambda.

AWS recently proposed a solution for exposing EKS-orchestrated microservices through an Application Load Balancer. Their solution relies on Kubernetes NodePort services.

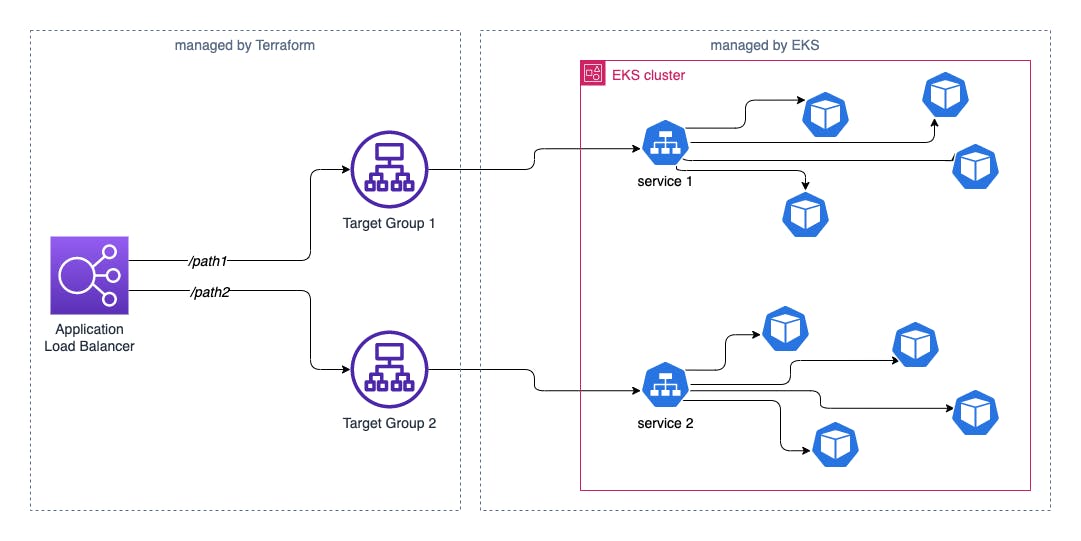

Instead, I want to propose a different solution. It relies on the EKS cluster's VPC CNI add-on and lets pods register automatically with their target group, without any NodePort.

Also, in my use case the Application Load Balancer is managed independently of EKS: Kubernetes does not control it. This lets you use other kinds of routing on the load balancer. For example, you can serve several domains over HTTPS (with a multi-domain certificate, or with several certificates selected via SNI) and route on the host name as well as the path.
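As an illustration of what an independently managed ALB allows, here is a hedged Terraform sketch that serves a second domain through SNI and routes it by host only. It assumes the HTTPS listener (`aws_lb_listener.alb_listener_https`) and the second target group (`aws_lb_target_group.alb_tg2`) defined later in this article. The domain `www.example.org`, the certificate resource `aws_acm_certificate.other_domain` and the priority value are hypothetical.

```hcl
# Hypothetical extra certificate for a domain not covered by the listener's
# default certificate; the ALB selects the matching certificate via SNI.
resource "aws_lb_listener_certificate" "other_domain" {
  listener_arn    = aws_lb_listener.alb_listener_https.arn
  certificate_arn = aws_acm_certificate.other_domain.arn # hypothetical resource
}

# Host-based rule: every request for www.example.org goes to the second
# target group, whatever its path. Lower priority numbers are evaluated first.
resource "aws_lb_listener_rule" "host_based_example" {
  listener_arn = aws_lb_listener.alb_listener_https.arn
  priority     = 50 # hypothetical; must be unique on this listener

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.alb_tg2.arn
  }

  condition {
    host_header {
      values = ["www.example.org"] # hypothetical domain
    }
  }
}
```

Rules are evaluated in ascending priority order, so the priority value only matters when the conditions of two rules overlap.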

Component configuration

The code shown here is partial; a complete example is available in my repository.

EKS cluster

This article assumes that an EKS cluster is already installed. If you want, you can read how to install an EKS cluster with Terraform in my article on autoscaling; a complete example is available in my repository.

VPC CNI add-on

The VPC CNI (Container Network Interface) add-on allows you to automatically assign a VPC IP address directly to a pod within the EKS cluster.

We want pods to register themselves on their target group, which is a resource outside Kubernetes but inside the VPC. That makes this add-on essential: it gives each pod a VPC-routable IP address that the ALB can reach directly. It is available natively as an EKS add-on, as explained in the AWS documentation.
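If the cluster is managed with Terraform, the add-on can be declared as an EKS managed add-on. This is a minimal sketch. It assumes that `module.eks_cluster.cluster_id` (the same output this article later passes as `cluster_name`) holds the cluster name. The resource name `vpc_cni` is hypothetical.

```hcl
# Manage the Amazon VPC CNI as an EKS add-on, so that each pod receives
# an IP address from the VPC subnets.
resource "aws_eks_addon" "vpc_cni" {
  cluster_name = module.eks_cluster.cluster_id # assumption: output holds the cluster name
  addon_name   = "vpc-cni"
  # addon_version is omitted here; consider pinning it explicitly.
}
```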

AWS Load Balancer Controller plugin

The AWS Load Balancer Controller is a controller that manages Elastic Load Balancers for a Kubernetes cluster.

It is typically used to provision an Application Load Balancer for an Ingress resource, or a Network Load Balancer for a Service resource.

In our case no provisioning is needed, because our Application Load Balancer is managed independently. Instead, we will use another resource the controller provides, the TargetGroupBinding custom resource (whose CRD is installed with the controller), to make the pods register with their target group.

The controller is not installed with EKS by default, so it must be installed following the instructions in the AWS documentation.

If you use Terraform, like me, you can consider using a module:

```hcl
module "load_balancer_controller" {
  source  = "DNXLabs/eks-lb-controller/aws"
  version = "0.6.0"

  cluster_identity_oidc_issuer     = module.eks_cluster.cluster_oidc_issuer_url
  cluster_identity_oidc_issuer_arn = module.eks_cluster.oidc_provider_arn
  cluster_name                     = module.eks_cluster.cluster_id

  namespace        = "kube-system"
  create_namespace = false
}
```

Load Balancer and Security Group

With Terraform, I create an Application Load Balancer in the public subnets of the VPC that hosts the EKS cluster, together with its security group:

```hcl
resource "aws_lb" "alb" {
  name                       = "${local.name}-alb"
  internal                   = false
  load_balancer_type         = "application"
  subnets                    = module.vpc.public_subnets
  enable_deletion_protection = false
  security_groups            = [aws_security_group.alb.id]
}

resource "aws_security_group" "alb" {
  name        = "${local.name}-alb-sg"
  description = "Allow ALB inbound traffic"
  vpc_id      = module.vpc.vpc_id

  tags = {
    "Name" = "${local.name}-alb-sg"
  }

  ingress {
    description = "allowed IPs"
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    description = "allowed IPs"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port        = 0
    to_port          = 0
    protocol         = "-1"
    cidr_blocks      = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }
}
```

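Remember to allow this security group as a source in the inbound rules of the cluster nodes' security group. With the VPC CNI, pod IPs are assigned to the nodes' network interfaces. Unless you use security groups for pods, it is therefore the node security group that filters traffic from the ALB to the pods.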
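In Terraform, this can be a single `aws_security_group_rule`. This is a sketch under two assumptions. First, the node security group ID is available as `module.eks_cluster.node_security_group_id`; the output name depends on the EKS module you use. Second, the pods listen on port 80, like the nginx example later in this article. The resource name `alb_to_pods` is hypothetical.

```hcl
# Let traffic from the ALB security group reach the pods' container port
# through the node security group.
resource "aws_security_group_rule" "alb_to_pods" {
  type                     = "ingress"
  description              = "Traffic from the ALB to pod container ports"
  security_group_id        = module.eks_cluster.node_security_group_id # assumption
  source_security_group_id = aws_security_group.alb.id
  protocol                 = "tcp"
  from_port                = 80 # container port (targetPort) of the exposed pods
  to_port                  = 80 # widen the range if other pods listen on other ports
}
```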

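At this point, I create the target groups that the pods will register with. The targets are pod IPs (`target_type = "ip"`), so the controller registers each pod on the port its Service targets, and the target group `port` only acts as a default. In this example I use two: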

```hcl
resource "aws_lb_target_group" "alb_tg1" {
  port        = 8080
  protocol    = "HTTP"
  target_type = "ip"
  vpc_id      = module.vpc.vpc_id

  tags = {
    Name = "${local.name}-tg1"
  }

  health_check {
    path = "/"
  }
}

resource "aws_lb_target_group" "alb_tg2" {
  port        = 9090
  protocol    = "HTTP"
  target_type = "ip"
  vpc_id      = module.vpc.vpc_id

  tags = {
    Name = "${local.name}-tg2"
  }

  health_check {
    path = "/"
  }
}
```
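Each TargetGroupBinding manifest on the Kubernetes side needs the ARN of its target group. One way to retrieve the ARNs is to expose them as Terraform outputs and read them with `terraform output -raw tg1_arn`. This is sketched here with hypothetical output names.

```hcl
# Expose the target group ARNs so they can be copied into the
# TargetGroupBinding manifests.
output "tg1_arn" {
  description = "ARN of the first target group"
  value       = aws_lb_target_group.alb_tg1.arn
}

output "tg2_arn" {
  description = "ARN of the second target group"
  value       = aws_lb_target_group.alb_tg2.arn
}
```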

The last piece of configuration on the Application Load Balancer is the listeners, which hold the traffic routing rules.

Each listener's default action applies to requests that match no other rule. As a security measure, I set it to reject the traffic with a fixed response:

```hcl
resource "aws_lb_listener" "alb_listener_http" {
  load_balancer_arn = aws_lb.alb.arn
  port              = "80"
  protocol          = "HTTP"

  default_action {
    type = "fixed-response"

    fixed_response {
      content_type = "text/plain"
      message_body = "Internal Server Error"
      status_code  = "500"
    }
  }
}

resource "aws_lb_listener" "alb_listener_https" {
  load_balancer_arn = aws_lb.alb.arn
  port              = "443"
  protocol          = "HTTPS"
  certificate_arn   = aws_acm_certificate.certificate.arn
  ssl_policy        = "ELBSecurityPolicy-2016-08"

  default_action {
    type = "fixed-response"

    fixed_response {
      content_type = "text/plain"
      message_body = "Internal Server Error"
      status_code  = "500"
    }
  }
}
```
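The HTTPS listener references `aws_acm_certificate.certificate`, which is not shown. A possible sketch is a single ACM certificate that covers several domains through subject alternative names. It assumes DNS validation, and the domain names are hypothetical.

```hcl
# One ACM certificate covering several (hypothetical) domains.
resource "aws_acm_certificate" "certificate" {
  domain_name               = "www.example.com"
  subject_alternative_names = ["api.example.com"]
  validation_method         = "DNS"

  # Create the replacement before destroying the old certificate,
  # since the listener still references it.
  lifecycle {
    create_before_destroy = true
  }
}
```

The certificate must be validated before the listener can use it. The DNS validation records (for example `aws_route53_record` resources) and an `aws_acm_certificate_validation` resource are omitted here.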

The actual rules are then attached to the listeners. The listener on port 80 simply redirects to the HTTPS listener. The listener on port 443 has rules that route traffic by host and path:

```hcl
resource "aws_lb_listener_rule" "alb_listener_http_rule_redirect" {
  listener_arn = aws_lb_listener.alb_listener_http.arn
  priority     = 100

  action {
    type = "redirect"

    redirect {
      port        = "443"
      protocol    = "HTTPS"
      status_code = "HTTP_301"
    }
  }

  condition {
    host_header {
      values = local.all_domains
    }
  }
}

resource "aws_lb_listener_rule" "alb_listener_rule_forwarding_path1" {
  listener_arn = aws_lb_listener.alb_listener_https.arn
  priority     = 100

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.alb_tg1.arn
  }

  condition {
    host_header {
      values = local.all_domains
    }
  }

  condition {
    path_pattern {
      values = [local.path1]
    }
  }
}

resource "aws_lb_listener_rule" "alb_listener_rule_forwarding_path2" {
  listener_arn = aws_lb_listener.alb_listener_https.arn
  priority     = 101

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.alb_tg2.arn
  }

  condition {
    host_header {
      values = local.all_domains
    }
  }

  condition {
    path_pattern {
      values = [local.path2]
    }
  }
}
```
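The rules reference `local.all_domains`, `local.path1` and `local.path2`, which the partial code does not define. A plausible definition, with hypothetical values, could be:

```hcl
locals {
  # Host names accepted by the listener rules (hypothetical values).
  all_domains = ["www.example.com", "api.example.com"]

  # ALB path patterns: "*" matches any sequence of characters, so
  # "/service1*" matches /service1 and /service1/anything (but also
  # /service10, so choose prefixes carefully).
  path1 = "/service1*"
  path2 = "/service2*"
}
```

The ALB forwards the original path unchanged. A request for `/service1/index.html` therefore reaches the pod as `/service1/index.html`, so each application must serve its content under its own prefix.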

Getting things working on Kubernetes

Once the AWS setup is complete, using this technique on EKS is straightforward: add a TargetGroupBinding resource for each service you want to expose on the load balancer through a target group.

Here is an example. Suppose I have a Deployment with a Service:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: nginx
  replicas: 1
  template:
    metadata:
      labels:
        app.kubernetes.io/name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app.kubernetes.io/name: nginx
spec:
  selector:
    app.kubernetes.io/name: nginx
  ports:
    - port: 8080
      targetPort: 80
      protocol: TCP
```

The only configuration I need to add is this:

```yaml
apiVersion: elbv2.k8s.aws/v1beta1
kind: TargetGroupBinding
metadata:
  name: nginx
spec:
  serviceRef:
    name: nginx
    port: 8080
  targetGroupARN: "arn:aws:elasticloadbalancing:eu-south-1:123456789012:targetgroup/tf-20220726090605997700000002/a6527ae0e19830d2"
```

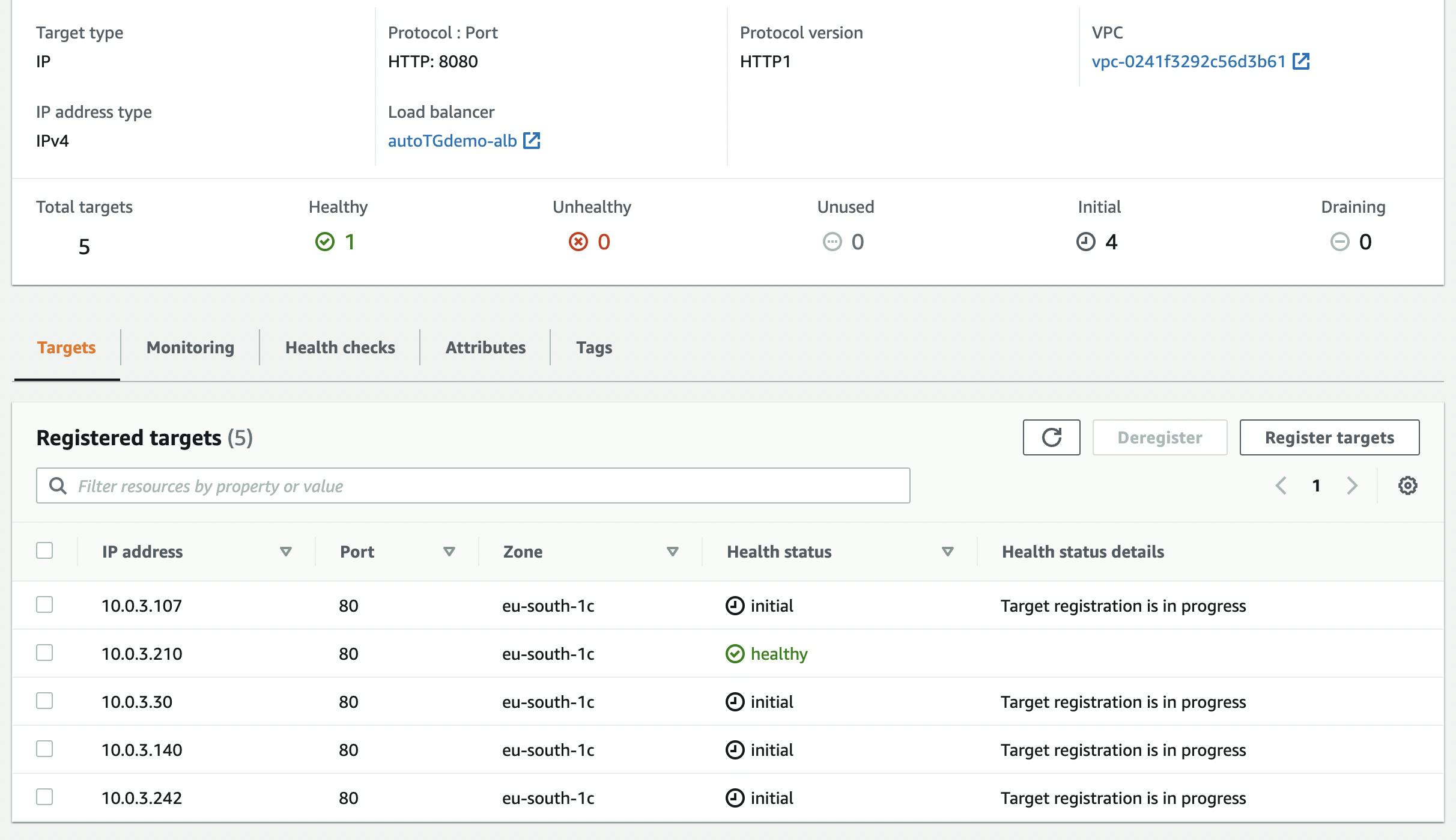
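Hardcoding the ARN works. If the Kubernetes resources are also managed with Terraform, though, the binding can reference the target group directly. This sketch rests on a few assumptions:
- the `hashicorp/kubernetes` provider is configured for the cluster;
- the TargetGroupBinding CRD is already installed, because `kubernetes_manifest` needs it to exist at plan time;
- the resource name `nginx_tgb` and the `default` namespace are hypothetical.

```hcl
# Same TargetGroupBinding as the YAML manifest, with the ARN taken from
# the target group resource instead of being copied by hand.
resource "kubernetes_manifest" "nginx_tgb" {
  manifest = {
    apiVersion = "elbv2.k8s.aws/v1beta1"
    kind       = "TargetGroupBinding"
    metadata = {
      name      = "nginx"
      namespace = "default" # assumption: same namespace as the nginx Service
    }
    spec = {
      serviceRef = {
        name = "nginx"
        port = 8080 # the Service port, not the container port
      }
      targetGroupARN = aws_lb_target_group.alb_tg1.arn
    }
  }
}
```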

From now on, the controller keeps the target group in sync with the Service. Every new pod behind it is registered automatically, and pods that go away are deregistered. To test it, just scale the number of replicas:

```shell
kubectl scale deployment nginx --replicas 5
```

and within a few seconds the new pods' IPs will be visible in the target group.