How to expose multiple applications on Google Kubernetes Engine with a single Cloud Load Balancer

This article is also available in Italian.

In my previous article, I talked about how to expose multiple applications hosted on AWS EKS via a single Application Load Balancer.

In this article, we will see how to do the same thing, this time on Google Cloud instead of AWS!

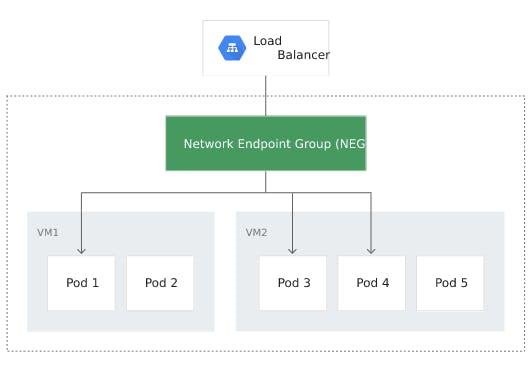

Network Endpoint Group and Container-native load balancing

On GCP, a Network Endpoint Group (NEG) is a configuration object that specifies a group of backend endpoints or services. A common use case for NEGs is deploying containerized services and using them as backends for a load balancer.

Container-native load balancing uses GCE_VM_IP_PORT NEGs, whose endpoints are pod IP addresses, and allows the load balancer to target pods and distribute traffic among them directly.

Container-native load balancing is commonly used through the GKE Ingress resource. In that case, the Ingress controller creates the whole chain of necessary resources, including the load balancer; this means that each application on GKE corresponds to an Ingress and, consequently, to a load balancer.
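For comparison, here is a minimal sketch of that conventional approach, written with the Terraform Kubernetes provider's kubernetes_ingress_v1 resource. The resource name, namespace and backend service are hypothetical; applied on GKE, the Ingress controller would provision a load balancer dedicated to this Ingress.

```hcl
# Sketch of the conventional approach (not the one used in this article):
# one Ingress per application. Name and namespace are hypothetical.
resource "kubernetes_ingress_v1" "nginx" {
  metadata {
    name      = "nginx"
    namespace = "default"
    annotations = {
      # Hands this Ingress to the GKE Ingress controller, which creates
      # the load balancer and the rest of the resource chain for it
      "kubernetes.io/ingress.class" = "gce"
    }
  }

  spec {
    # Every request reaching this Ingress goes to the "nginx" service, port 80
    default_backend {
      service {
        name = "nginx"
        port {
          number = 80
        }
      }
    }
  }
}
```

In the per-application layout described above, exposing Apache as well would mean a second Ingress and, with it, a second load balancer.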

Without the Ingress controller, GCP also allows you to create autonomous NEGs; in that case, however, you have to act manually, losing the elasticity and speed of a cloud-native architecture.
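To make "acting manually" concrete, here is a sketch of a GCE_VM_IP_PORT NEG maintained by hand with the Google provider's google_compute_network_endpoint_group and google_compute_network_endpoint resources. The network, subnetwork, zone, node name and pod IP are all hypothetical values.

```hcl
# Sketch only: a container-native NEG maintained by hand instead of by GKE.
# Network, subnetwork, zone, node name and pod IP are hypothetical.
resource "google_compute_network_endpoint_group" "manual_nginx" {
  name                  = "manual-nginx"
  network               = "my-vpc"
  subnetwork            = "my-subnet"
  zone                  = "europe-west1-b"
  network_endpoint_type = "GCE_VM_IP_PORT"
  default_port          = 80
}

# One resource per pod: the pod IP plus the node VM that hosts it.
# If the pod is rescheduled and gets a new IP, this must be edited by hand.
resource "google_compute_network_endpoint" "nginx_pod_1" {
  network_endpoint_group = google_compute_network_endpoint_group.manual_nginx.name
  zone                   = "europe-west1-b"
  instance               = "gke-my-cluster-default-pool-node-1"
  ip_address             = "10.8.0.12"
  port                   = 80
}
```

Every scaling event, rolling update or rescheduling would require updating these endpoints, which is the manual work that the GKE-managed NEGs used in this article avoid.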

To summarize, in my use case I want a single load balancer, configured independently of GKE, that routes traffic to different GKE applications according to the rules defined by my architecture. At the same time, I want to take advantage of cloud-native automation, without having to update any configuration manually.

AWS ALB vs GCP Load Balancing

When implementing the same use case on two different cloud providers, the most noteworthy difference lies in the "boundary" up to which Kubernetes manages resources or, looking at it from the other side, in the configuration that must be prepared on the cloud provider (manually or, as we will see, with Terraform).

In the EKS article, in addition to the ALB, I configured on AWS one target group for each application to be exposed; these target groups were created as "empty boxes". I then created the deployments and their related services on EKS and, finally, a TargetGroupBinding (a custom resource of the AWS Load Balancer Controller) telling the pods behind a specific service which target group to register with.
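For reference, a sketch of that EKS-side binding, expressed here with the Terraform Kubernetes provider's kubernetes_manifest resource for consistency with the rest of the code. The namespace and the target group ARN are placeholders, and the manifest used in the previous article may differ.

```hcl
# Sketch of an AWS Load Balancer Controller TargetGroupBinding.
# Namespace and ARN are placeholders, not real values.
resource "kubernetes_manifest" "nginx_target_group_binding" {
  manifest = {
    apiVersion = "elbv2.k8s.aws/v1beta1"
    kind       = "TargetGroupBinding"
    metadata = {
      name      = "nginx"
      namespace = "default"
    }
    spec = {
      # The controller registers the endpoints behind this service...
      serviceRef = {
        name = "nginx"
        port = 80
      }
      # ...into the "empty box" target group created beforehand on AWS
      targetGroupARN = "arn:aws:elasticloadbalancing:REGION:ACCOUNT_ID:targetgroup/nginx/ID"
    }
  }
}
```

Note that kubernetes_manifest needs the TargetGroupBinding CRD to be already installed in the cluster at plan time.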

In GCP, the Backend Service resource (roughly equivalent to an AWS target group) cannot be created as an "empty box": from the moment it is created, it needs to know the targets it forwards traffic to. As I said before, in my use case the targets are the NEGs that GKE automatically generates when a Kubernetes service is created; consequently, I create these services together with the infrastructure (they will be my "empty boxes") and manage only the application deployments separately.

This apparent difference is purely operational: it is just a matter of configuring the Kubernetes service with a different tool. It becomes relevant, however, when the cloud resources are configured (for example, with Terraform) by a different team from the one that deploys the applications in the cluster.

From a functional point of view, the two solutions are exactly equivalent.

The other difference is that GKE assigns VPC IP addresses to pods natively, without any add-on, while EKS relies on the Amazon VPC CNI plugin or a similar third-party plugin.

Component configuration

GKE cluster and network configuration are considered a prerequisite and will not be covered here. The code shown here is partial; a complete example can be found here.

Kubernetes Services

In this example, I use two different applications, Nginx and Apache, to show traffic being routed to two different endpoints.

With Terraform, I create the Kubernetes services for the two applications; their annotations make GKE create the NEGs automatically:

resource "kubernetes_service" "apache" {
  metadata {
    name      = "apache"
    namespace = local.namespace
    annotations = {
      # Asks the GKE NEG controller to create a NEG with this name for port 80
      "cloud.google.com/neg" = "{\"exposed_ports\": {\"80\":{\"name\": \"${local.neg_name_apache}\"}}}"
      # Mirrors the status annotation that the NEG controller writes on the service
      "cloud.google.com/neg-status" = jsonencode(
        {
          network_endpoint_groups = {
            "80" = local.neg_name_apache
          }
          zones = data.google_compute_zones.available.names
        }
      )
    }
  }

  spec {
    port {
      name        = "http"
      protocol    = "TCP"
      port        = 80
      target_port = "80"
    }
    selector = {
      app = "apache"
    }
    type = "ClusterIP"
  }
}

resource "kubernetes_service" "nginx" {
  metadata {
    name      = "nginx"
    namespace = local.namespace
    annotations = {
      # Asks the GKE NEG controller to create a NEG with this name for port 80
      "cloud.google.com/neg" = "{\"exposed_ports\": {\"80\":{\"name\": \"${local.neg_name_nginx}\"}}}"
      # Mirrors the status annotation that the NEG controller writes on the service
      "cloud.google.com/neg-status" = jsonencode(
        {
          network_endpoint_groups = {
            "80" = local.neg_name_nginx
          }
          zones = data.google_compute_zones.available.names
        }
      )
    }
  }

  spec {
    port {
      name        = "http"
      protocol    = "TCP"
      port        = 80
      target_port = "80"
    }
    selector = {
      app = "nginx"
    }
    type = "ClusterIP"
  }
}
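The cloud.google.com/neg value above is a hand-escaped JSON string. As a possible alternative, sketched here with hypothetical local names (neg_annotation_apache, neg_annotation_nginx), the same JSON can be produced with jsonencode, as the neg-status annotation already does:

```hcl
locals {
  # Same content as the hand-escaped "cloud.google.com/neg" strings above;
  # jsonencode renders {"exposed_ports":{"80":{"name":"apache"}}}
  neg_annotation_apache = jsonencode({
    exposed_ports = {
      "80" = { name = local.neg_name_apache }
    }
  })

  neg_annotation_nginx = jsonencode({
    exposed_ports = {
      "80" = { name = local.neg_name_nginx }
    }
  })
}
```

The annotation could then be written as "cloud.google.com/neg" = local.neg_annotation_apache. The resulting string differs from the original only in whitespace, which is not significant in JSON, and it removes the risk of an escaping mistake.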

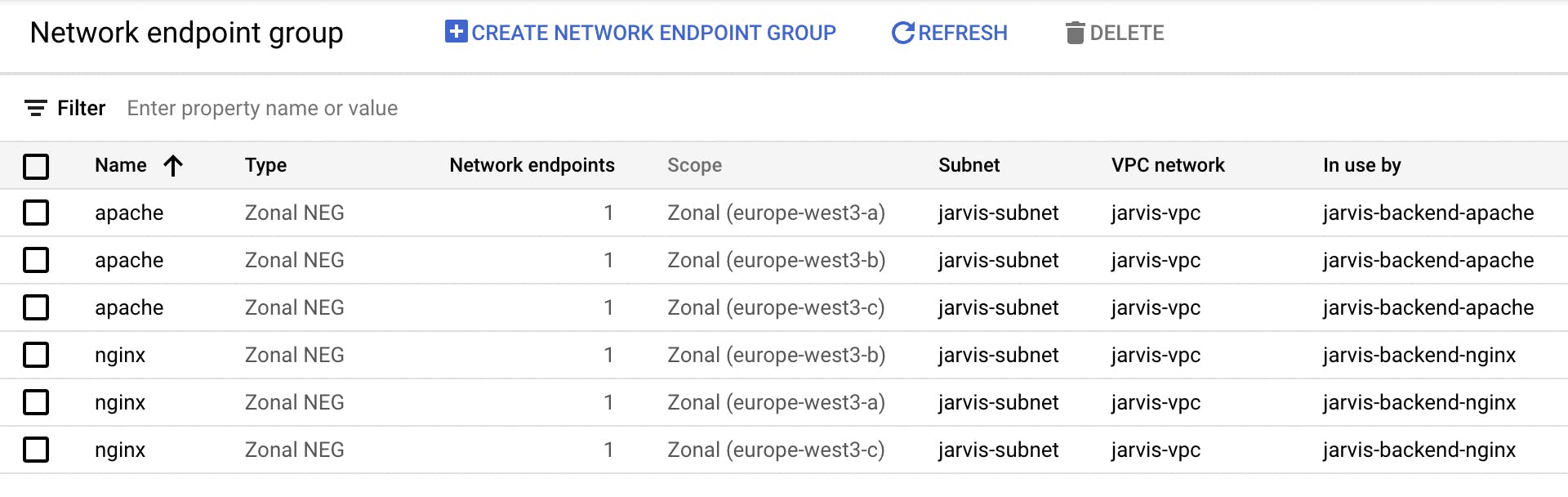

NEG

NEG self-links always have the same structure, so it is easy to build the list of NEGs for each application, one per zone:

locals {
  neg_name_apache = "apache"
  neg_apache      = formatlist("https://www.googleapis.com/compute/v1/projects/%s/zones/%s/networkEndpointGroups/%s", module.enabled_google_apis.project_id, data.google_compute_zones.available.names, local.neg_name_apache)

  neg_name_nginx = "nginx"
  neg_nginx      = formatlist("https://www.googleapis.com/compute/v1/projects/%s/zones/%s/networkEndpointGroups/%s", module.enabled_google_apis.project_id, data.google_compute_zones.available.names, local.neg_name_nginx)
}

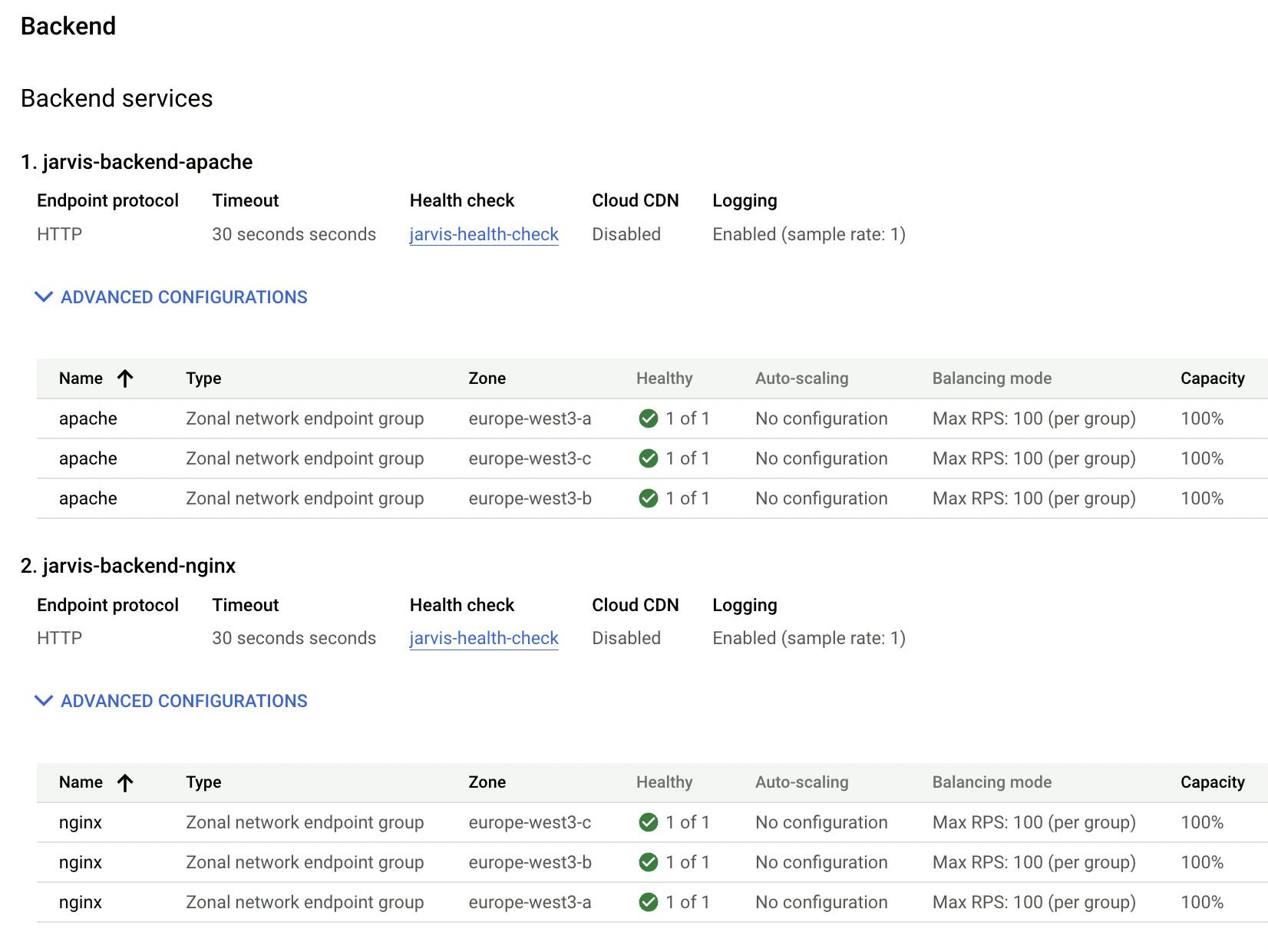

Backend

At this point it is easy to create the backend services:

resource "google_compute_backend_service" "backend_apache" {
  name = "${local.name}-backend-apache"

  dynamic "backend" {
    for_each = local.neg_apache
    content {
      group          = backend.value
      balancing_mode = "RATE"
      max_rate       = 100
    }
  }

  ...
}

resource "google_compute_backend_service" "backend_nginx" {
  name = "${local.name}-backend-nginx"

  dynamic "backend" {
    for_each = local.neg_nginx
    content {
      group          = backend.value
      balancing_mode = "RATE"
      max_rate       = 100
    }
  }

  ...
}
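The backend definitions above elide some arguments. A backend service whose backends are GCE_VM_IP_PORT NEGs is normally paired with a health check; a minimal sketch, assuming an HTTP check on "/" (the resource name and path are hypothetical), could look like this:

```hcl
# Hypothetical health check for the NEG backends.
# USE_SERVING_PORT probes each endpoint (pod IP) on the port it serves on.
resource "google_compute_health_check" "http" {
  project = module.enabled_google_apis.project_id
  name    = "${local.name}-http-hc"

  http_health_check {
    port_specification = "USE_SERVING_PORT"
    request_path       = "/"
  }
}
```

Each backend service would then reference it with health_checks = [google_compute_health_check.http.id]. Health-check probes originate from Google's ranges 35.191.0.0/16 and 130.211.0.0/22, so the VPC firewall must allow them to reach the pods on port 80.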

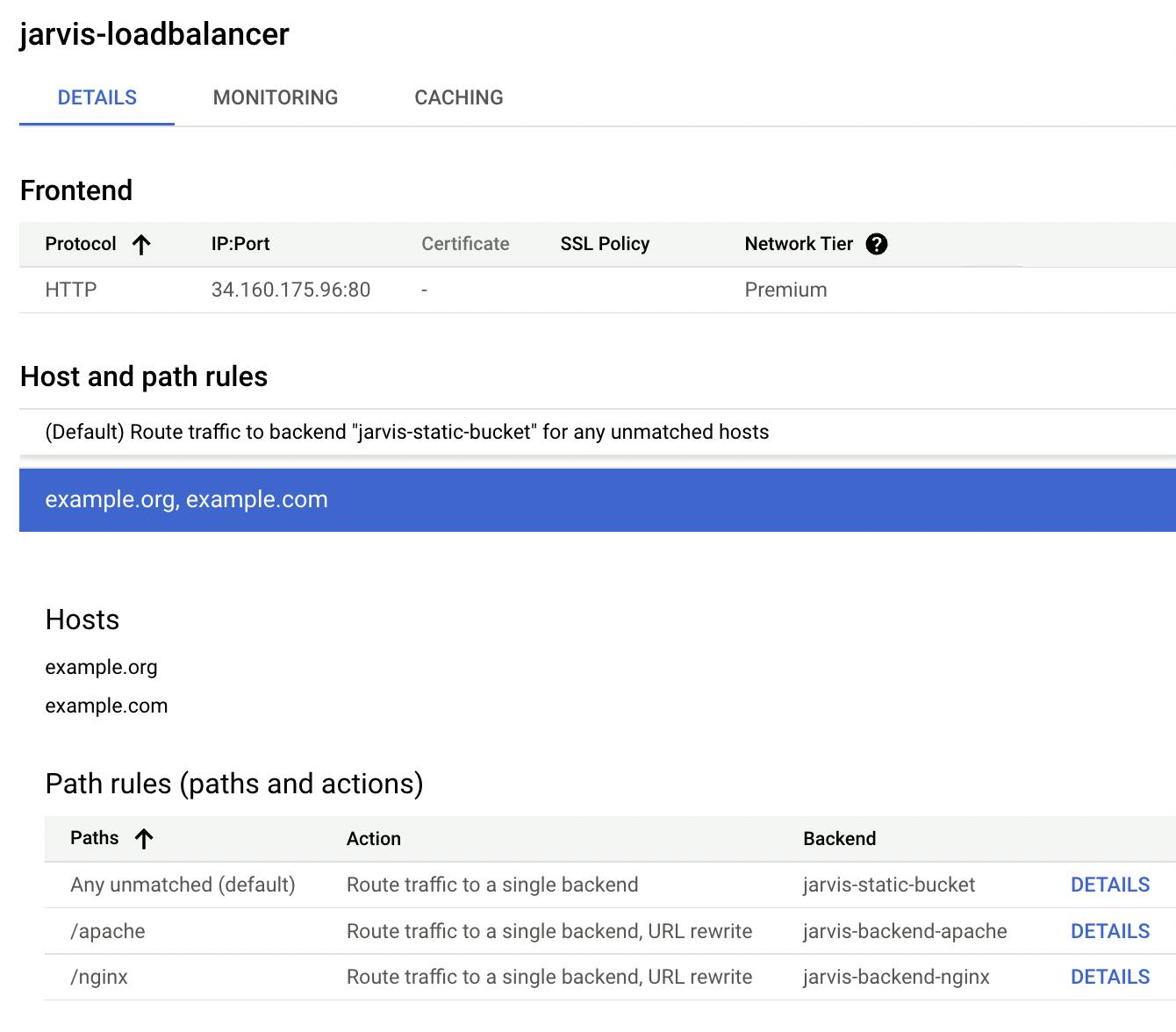

URL Map

I then define the url_map resource, which holds the traffic routing logic. In this example, I use the same set of rules for all the domains my load balancer responds to, and I route traffic based on the request path; the routing rules can be customized as described in the documentation.

resource "google_compute_url_map" "http_url_map" {
  project         = module.enabled_google_apis.project_id
  name            = "${local.name}-loadbalancer"
  default_service = google_compute_backend_bucket.static_site.id

  host_rule {
    hosts        = local.domains
    path_matcher = "all"
  }

  path_matcher {
    name            = "all"
    default_service = google_compute_backend_bucket.static_site.id

    path_rule {
      paths = ["/apache"]
      route_action {
        url_rewrite {
          path_prefix_rewrite = "/"
        }
      }
      service = google_compute_backend_service.backend_apache.id
    }

    path_rule {
      paths = ["/nginx"]
      route_action {
        url_rewrite {
          path_prefix_rewrite = "/"
        }
      }
      service = google_compute_backend_service.backend_nginx.id
    }
  }
}
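In a URL map, a path rule without a trailing "*" matches only that exact path, so with the rules above a request for /nginx/index.html falls through to the default backend bucket. If sub-paths should reach the applications too, a variant of the Nginx rule (a fragment to be placed inside the path_matcher "all" block, in place of the existing /nginx rule, since the same path cannot appear twice) might look like this:

```hcl
# Fragment for the path_matcher "all" block: "/nginx" matches only that exact
# path, while "/nginx/*" also matches sub-paths such as /nginx/index.html
path_rule {
  paths = ["/nginx", "/nginx/*"]

  route_action {
    url_rewrite {
      path_prefix_rewrite = "/"
    }
  }

  service = google_compute_backend_service.backend_nginx.id
}
```

How path_prefix_rewrite rewrites a wildcard match should be checked against the URL map documentation before relying on this variant.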

Putting it all together

Finally, the resources that bind the created components together are a target_http_proxy and a global_forwarding_rule:

resource "google_compute_target_http_proxy" "http_proxy" {
  project = module.enabled_google_apis.project_id
  name    = "http-proxy"
  url_map = google_compute_url_map.http_url_map.self_link
}

resource "google_compute_global_forwarding_rule" "http_fw_rule" {
  project               = module.enabled_google_apis.project_id
  name                  = "http-fw-rule"
  port_range            = "80"
  target                = google_compute_target_http_proxy.http_proxy.self_link
  load_balancing_scheme = "EXTERNAL"
  ip_address            = google_compute_global_address.ext_lb_ip.address
}
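The url_map and the forwarding rule above reference google_compute_backend_bucket.static_site and google_compute_global_address.ext_lb_ip, which the partial code does not show. A minimal sketch of those resources follows; the names and the bucket location are hypothetical, and serving objects through a backend bucket typically also requires granting public read access on them, which is not shown.

```hcl
# Hypothetical definitions of resources referenced above but not shown.
resource "google_compute_global_address" "ext_lb_ip" {
  project = module.enabled_google_apis.project_id
  name    = "${local.name}-lb-ip"
}

resource "google_storage_bucket" "static_site" {
  project  = module.enabled_google_apis.project_id
  name     = "${local.name}-static-site" # bucket names must be globally unique
  location = "EU"
}

resource "google_compute_backend_bucket" "static_site" {
  project     = module.enabled_google_apis.project_id
  name        = "${local.name}-static-site"
  bucket_name = google_storage_bucket.static_site.name
}

output "load_balancer_ip" {
  value = google_compute_global_address.ext_lb_ip.address
}
```

Because the path rules only apply to the hosts listed in local.domains, a test request must carry one of those hosts, for example curl -H "Host: <one of local.domains>" http://<load_balancer_ip>/nginx; a request using the bare IP as host would be served by the default backend bucket.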

Use on Kubernetes

Once the setup on GCP is complete, using this technique on GKE is even easier than on EKS: it is enough to create a deployment whose pods match the selector of the service already connected to the load balancer:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nginx
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: apache
  labels:
    app: apache
spec:
  selector:
    matchLabels:
      app: apache
  strategy:
    type: Recreate
  replicas: 3
  template:
    metadata:
      labels:
        app: apache
    spec:
      containers:
        - name: httpd
          image: httpd:2.4
          ports:
            - containerPort: 80

From now on, every new pod matching the service's selector is automatically attached to that service's NEG. To test it, just scale the deployment's replicas:

kubectl scale deployment nginx --replicas 5

and within a few seconds the new pods will appear as endpoints of the NEG.
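To observe this from Terraform as well, a possible sketch reads each zonal Nginx NEG with the google_compute_network_endpoint_group data source and exposes its size attribute. It assumes, like the neg-status annotation above, that a NEG exists in every zone returned by data.google_compute_zones.available; the data source and output names are hypothetical.

```hcl
# Sketch: read the zonal NEGs created by GKE for the nginx service.
data "google_compute_network_endpoint_group" "nginx" {
  for_each = toset(data.google_compute_zones.available.names)

  project = module.enabled_google_apis.project_id
  name    = local.neg_name_nginx
  zone    = each.value
}

output "nginx_neg_size_by_zone" {
  # Number of network endpoints (pod IP:port pairs) in each zonal NEG
  value = { for zone, neg in data.google_compute_network_endpoint_group.nginx : zone => neg.size }
}
```

After the scale-out, running terraform apply -refresh-only followed by terraform output nginx_neg_size_by_zone should show the endpoint counts growing across zones once the NEG controller has attached the new pods.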

Thanks to Cristian Conte for contributing his GCP knowledge!