Transforming Diagrams into Code: AI-Powered IaC with Claude 3 and Amazon Bedrock

Why Rapid Prototyping Matters

As a Cloud Architect, I often need to prototype rapidly for various PoC projects. During the brainstorming phase, it's common to rely on infrastructure diagrams to visualize the project's architecture. However, translating these diagrams into Infrastructure as Code (IaC) can be time-consuming.

Tools like Amazon CodeWhisperer have been instrumental in speeding up this task by assisting in the rapid generation of code directly within the IDE - especially with IaC, given its relatively standard nature. But what if there were an even faster way?

Recently, Anthropic unveiled its latest innovation: Claude 3, now part of the models offered by Amazon Bedrock. Claude 3's most notable advancement is its ability to interpret images. This functionality introduces a fascinating possibility: what if you could transform a brainstorming session's infrastructure diagram directly into IaC, and deploy the code immediately?

Why do I find this idea interesting? Because it lowers the entry barrier to IaC for people with varying levels of programming expertise, and it doubles as a learning tool. Team members can see a visual design translated immediately into executable code, which helps them grasp coding practices and understand how infrastructure design decisions map to code. By simplifying the entry point to IaC, this approach encourages broader participation in the development process and fosters a culture of continuous learning within teams.

To prove my point, this idea needs to be prototyped rapidly too. Let's see how to do it!

Reading Images with Amazon Bedrock and Claude 3 Sonnet

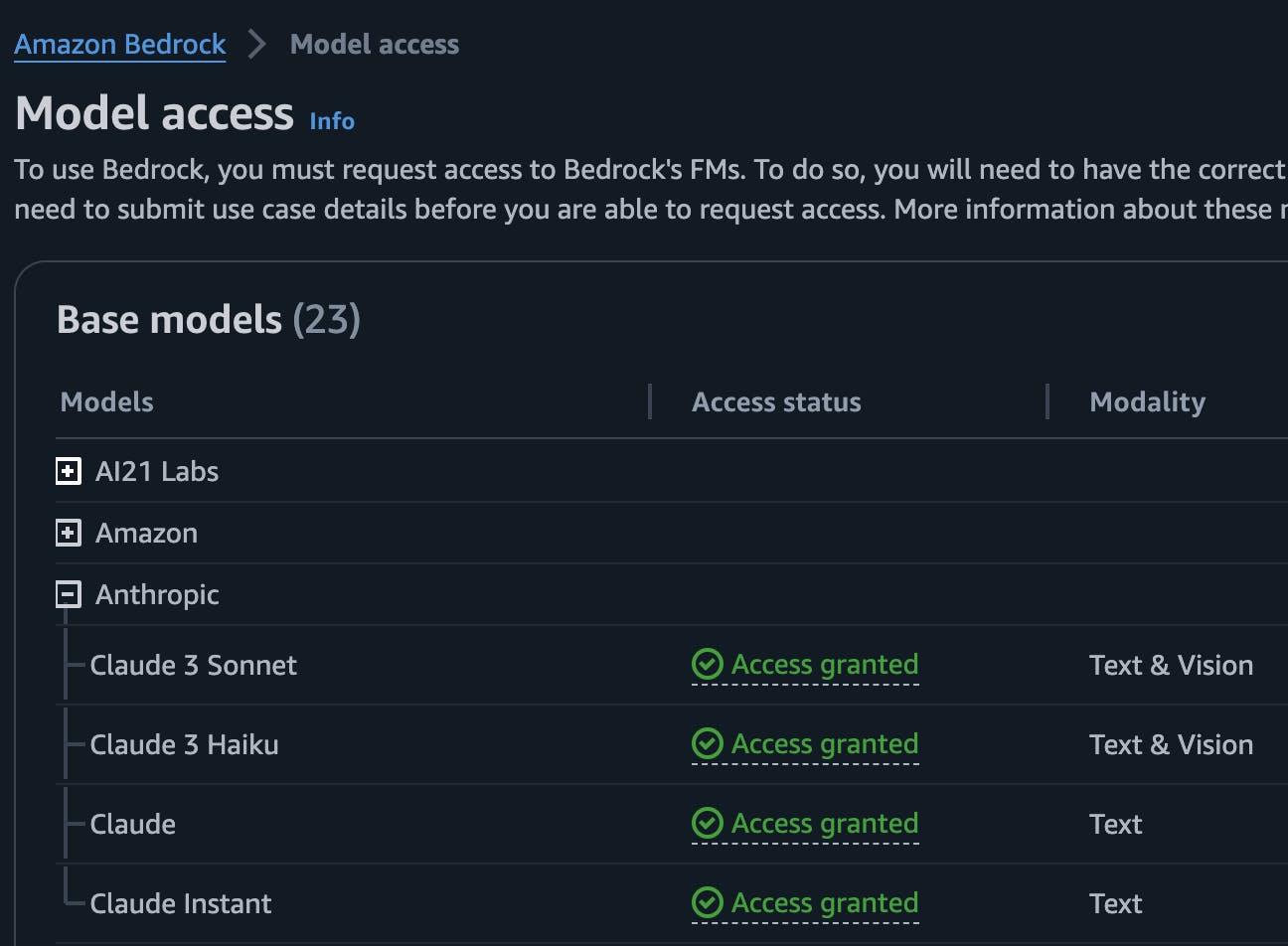

First up, make sure you have an AWS account ready, with access to the Anthropic Claude 3 Sonnet model enabled in Amazon Bedrock. Check out the guide on AWS for detailed instructions.

Let's start coding (all the examples are in Python) to interact with Amazon Bedrock.

The first function encodes an image to base64. This is necessary for images uploaded by the user, preparing them for processing. The function takes a string representing the path to the uploaded image and returns two values: the image's media type (for example, image/png) and the base64-encoded image data.

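    import os
    import base64
    import io

    from PIL import Image


    def image_base64_encoder(image_name):
        """Return (media_type, base64_string) for an image path (or file-like object)."""
        open_image = Image.open(image_name)
        # Re-save the image into an in-memory buffer, keeping its original format
        image_bytes = io.BytesIO()
        open_image.save(image_bytes, format=open_image.format)
        image_bytes = image_bytes.getvalue()
        # Base64-encode the bytes and decode to a str so it fits in a JSON payload
        image_base64 = base64.b64encode(image_bytes).decode('utf-8')
        # Build the media type from the format name, e.g. "PNG" -> "image/png"
        file_type = f"image/{open_image.format.lower()}"
        return file_type, image_base64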
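
If you'd rather not re-encode the image through Pillow, a lighter alternative is to base64-encode the file's bytes as they are and guess the media type from the file extension. The sketch below is not the function used in this post; `encode_image_file` is a hypothetical name, and it assumes the extension matches the actual image format.

```python
import base64
import mimetypes
from pathlib import Path


def encode_image_file(path):
    """Return (media_type, base64_string) for an image file without re-encoding it.

    Hypothetical helper: assumes the file extension reflects the real image format.
    """
    path = Path(path)
    # Guess the media type from the extension, e.g. ".png" -> "image/png"
    media_type, _ = mimetypes.guess_type(path.name)
    if media_type is None or not media_type.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {path.name}")
    # Base64-encode the bytes exactly as they are stored on disk
    encoded = base64.b64encode(path.read_bytes()).decode("utf-8")
    return media_type, encoded
```

The trade-off is that nothing validates the file contents here, whereas opening the image with Pillow fails early on a file that is not really an image.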

The second function calls on Amazon Bedrock for an image-to-text transformation with Claude 3 Sonnet. This is particularly flexible as it can handle both image and text inputs. In scenarios where the user doesn't provide any text, the function will include a default prompt. Claude 3 requires text input, so this ensures compatibility with its expectations.

    import json

    import boto3

    # Assumption: the client setup is not shown in this post. This version relies on
    # the credentials and region from your default AWS configuration.
    bedrock = boto3.client("bedrock-runtime")


    def image_to_text(image_name, text) -> str:
        file_type, image_base64 = image_base64_encoder(image_name)
        system_prompt = """Describe every detail you can about this image, be extremely thorough and detail even the most minute aspects of the image.
    If a more specific question is presented by the user, make sure to prioritize that answer.
    """
        # Claude 3 expects a text part, so fall back to a default when none is given
        if text == "":
            text = "Use the system prompt"
        prompt = {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": 4096,  # Claude 3 Sonnet generates at most 4096 output tokens
            "temperature": 0.5,
            "system": system_prompt,
            "messages": [
                {
                    "role": "user",
                    "content": [
                        {
                            "type": "image",
                            "source": {
                                "type": "base64",
                                "media_type": file_type,
                                "data": image_base64
                            }
                        },
                        {
                            "type": "text",
                            "text": text
                        }
                    ]
                }
            ]
        }
        json_prompt = json.dumps(prompt)
        response = bedrock.invoke_model(body=json_prompt, modelId="anthropic.claude-3-sonnet-20240229-v1:0",
                                        accept="application/json", contentType="application/json")
        # The response body is a stream; read it and take the first text block
        response_body = json.loads(response.get('body').read())
        llm_output = response_body['content'][0]['text']
        return llm_output
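
To make the request and response shapes easier to inspect without calling AWS, the payload construction and the response parsing can be pulled out into two small pure functions. This is a minimal sketch, not the code from this post: `build_claude_request` and `extract_text` are hypothetical names, and the default of 4096 for `max_tokens` is my assumption based on Claude 3's output limit.

```python
import json


def build_claude_request(media_type, image_base64, text, system_prompt,
                         max_tokens=4096, temperature=0.5):
    """Build the JSON body for a request carrying one image part and one text part."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "temperature": temperature,
        "system": system_prompt,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": image_base64,
                        },
                    },
                    {"type": "text", "text": text},
                ],
            }
        ],
    }
    return json.dumps(body)


def extract_text(response_body):
    """Join all text parts of an already-parsed response body (a dict)."""
    return "".join(
        part["text"]
        for part in response_body.get("content", [])
        if part.get("type") == "text"
    )
```

Keeping these steps free of network calls means you can print the payload, or unit test the parsing with a hand-written dictionary, before spending any tokens.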

It almost seems too good to be true, but that's it! This code is all you need to interact with Amazon Bedrock and use the Claude 3 Sonnet model. Now you can run this code, pass it an image, and get back CDK code ready to be executed. In my case, since I'd like to make it easily accessible to friends and colleagues without any hassle, a nice web interface would be handy.
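
One practical detail: the model usually returns the code surrounded by explanatory text. Assuming it wraps code in Markdown fences (common, but not guaranteed), a small helper can pull out just the code before you save or run it. `extract_code` is a hypothetical name used only for this sketch.

```python
import re

# Build the fence marker programmatically so this snippet can live inside a fenced block
FENCE = "`" * 3
# A fence, an optional language tag, a newline, then the code up to the closing fence
CODE_BLOCK = re.compile(FENCE + r"[\w+-]*[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(llm_output):
    """Return all fenced code blocks joined together, or the whole text if none is fenced."""
    blocks = CODE_BLOCK.findall(llm_output)
    if not blocks:
        return llm_output.strip()
    return "\n\n".join(block.strip() for block in blocks)
```

Whatever comes out of this step is still generated code, so review it before deploying anything to your account.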

Crafting Interfaces with Ease with Streamlit

In the context of PoCs and rapid prototyping, web interfaces also need to be quick to create. Let's just say my frontend skills are more or less at this level (the image is not mine, but I find it truly perfect 😅).

To rapidly build a web interface I used Streamlit, a cool open-source Python library perfect for those of us who aren’t UI wizards. With just a few lines of Python code, you can automatically generate web app interfaces without messing with UI design; it's ideal for PoCs where the interface doesn't have to match the company brand perfectly but the goal is to quickly create a working example.

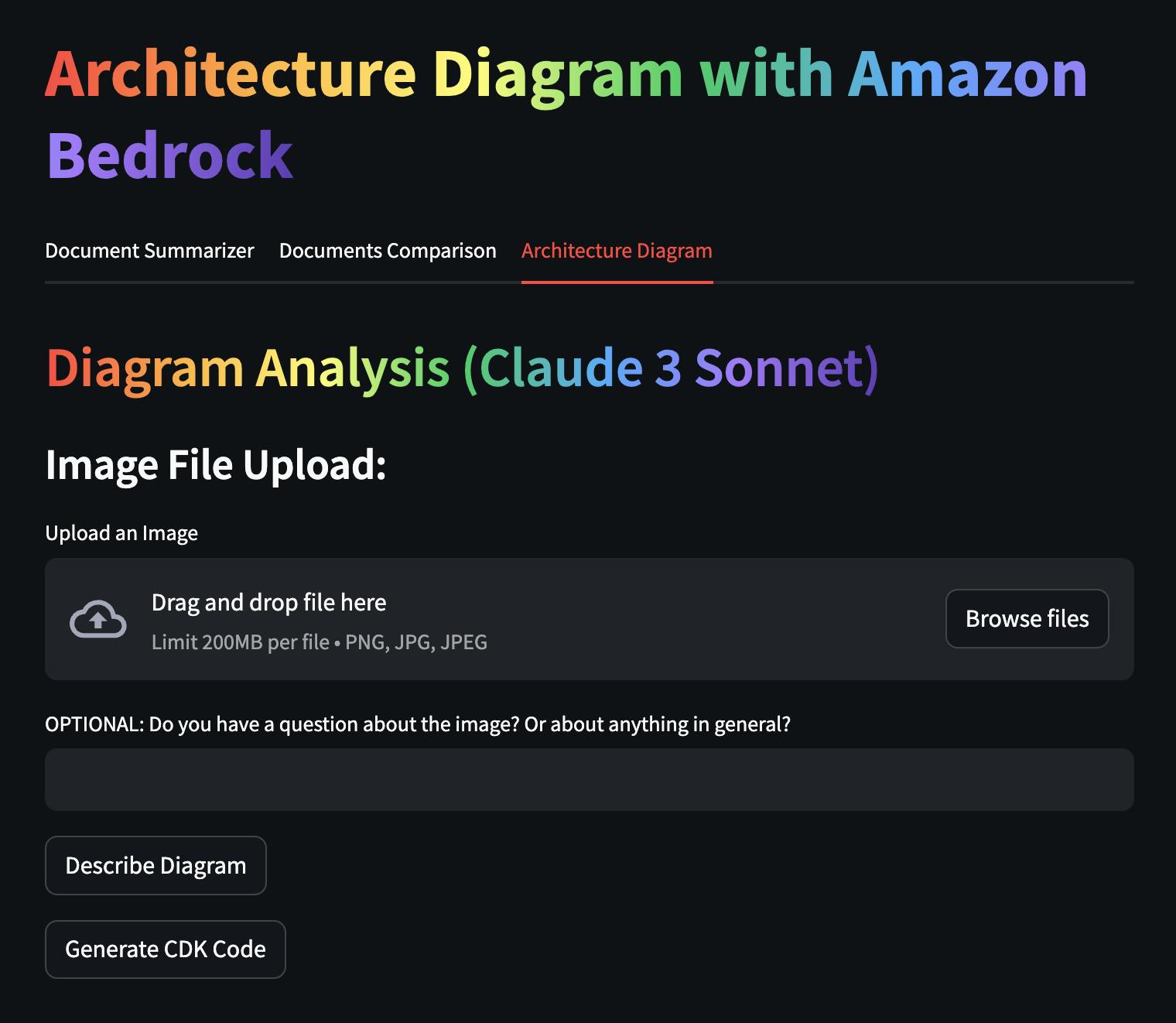

In this app.py file you see how we create a visually appealing page with just a few lines of code. This page includes an input for uploading our architectural diagram image, an optional text field for any specific requests, and two buttons. One button generates a detailed description of the image (useful for documentation, for example), and the other creates the corresponding CDK code based on the diagram.

    import streamlit as st

    st.title(""":rainbow[Architecture Diagrams with Amazon Bedrock]""")
    st.header(""":rainbow[Diagram Analysis (Claude 3 Sonnet)]""")

    with st.container():
        st.subheader('Image File Upload:')
        File = st.file_uploader('Upload an Image', type=["png", "jpg", "jpeg"], key="diag")
        text = st.text_input("OPTIONAL: Do you have a question about the image? Or about anything in general?")
        result1 = st.button("Describe Diagram")
        result2 = st.button("Generate CDK Code")

When you press a button, it runs the function we wrote earlier, using a given prompt:

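    # Only call the model when a button was pressed and an image was uploaded
    if (result1 or result2) and File is not None:
        if result1:
            input_text = "You are an AWS solution architect. The image provided is an architecture diagram. Explain the technical data flow in detail. Do not use preambles."
        else:
            input_text = "You are an AWS solution architect. The image provided is an architecture diagram. Provide cdk python code to implement using aws-cdk-lib in detail."
        # Assumption: the optional text field is appended to the prompt as extra detail
        if text:
            input_text += " " + text
        # File.name is only a file name, not a path on disk; Image.open also accepts
        # file-like objects, so the uploaded file is passed directly
        st.write(image_to_text(File, input_text))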
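
The mapping from button to prompt can also live in a small pure function, which keeps the Streamlit script short and makes the prompts testable on their own. This is a sketch under my own assumptions: `build_prompt`, the action names, and the wording used to append the user's extra text are all hypothetical.

```python
BASE_ROLE = "You are an AWS solution architect. The image provided is an architecture diagram."

# One prompt per button; the keys are hypothetical action names
PROMPTS = {
    "describe": BASE_ROLE + " Explain the technical data flow in detail. Do not use preambles.",
    "cdk": BASE_ROLE + " Provide cdk python code to implement using aws-cdk-lib in detail.",
}


def build_prompt(action, extra_text=""):
    """Return the prompt for a button action, with optional user details appended."""
    if action not in PROMPTS:
        raise ValueError(f"Unknown action: {action!r}")
    extra_text = extra_text.strip()
    if extra_text:
        return f"{PROMPTS[action]} Additional details from the user: {extra_text}"
    return PROMPTS[action]
```

In the app, each button would then pass its action name and the content of the optional text field to `build_prompt`.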

Once you've set up a virtual environment, activated it, installed the dependencies from requirements.txt (and created a .env file to set your AWS CLI profile), your application will be all set. To launch the application and its basic frontend, just run this command in your terminal:

streamlit run app.py

You'll then have a web interface ready to use.

Wrapping Up

In just a short time, we've put together a working app with Streamlit, which was pretty straightforward. We also saw how Claude 3 Sonnet can quickly give us a detailed description of a diagram or churn out working code.

Remember, though, like any generative AI, it might give you different results if you try again. You might get something better or something that needs a bit more tweaking. That's what the text field in our demo app is for - adding more details to get those results just right.

To wrap up, here you can find the full code example, and another link to a repository where AWS offers plenty of quick-start examples for getting to grips with Bedrock. Explore these resources to expand your skills and start building your own solutions with the power of Streamlit and Amazon Bedrock.

Happy coding!